Ongoing developments in artificial intelligence, particularly in AI linguistic communication, will affect various aspects of our lives in various ways. We can’t foresee all of the uses to which technologies such as large language models (LLMs) will be put, nor all of the consequences of their employment. But we can reasonably say the effects will be significant, and we can reasonably be concerned that some of those effects will be bad. Such concern is rendered even more reasonable by the fact that it’s not just the consequences of LLMs that we’re ignorant of; there’s a lot we don’t know about what LLMs can do, how they do it, and how well. Given this ignorance, it is hard to believe we are prepared for the changes we’ve set in motion.

By now, many of the readers of Daily Nous will have at least heard of GPT-3 (recall “Philosophers on GPT-3” as well as this discussion and this one regarding its impact on teaching). But GPT-3 (still undergoing upgrades) is just one of dozens of LLMs currently in existence (and it’s rumored that GPT-4 is likely to be released sometime over the next few months).

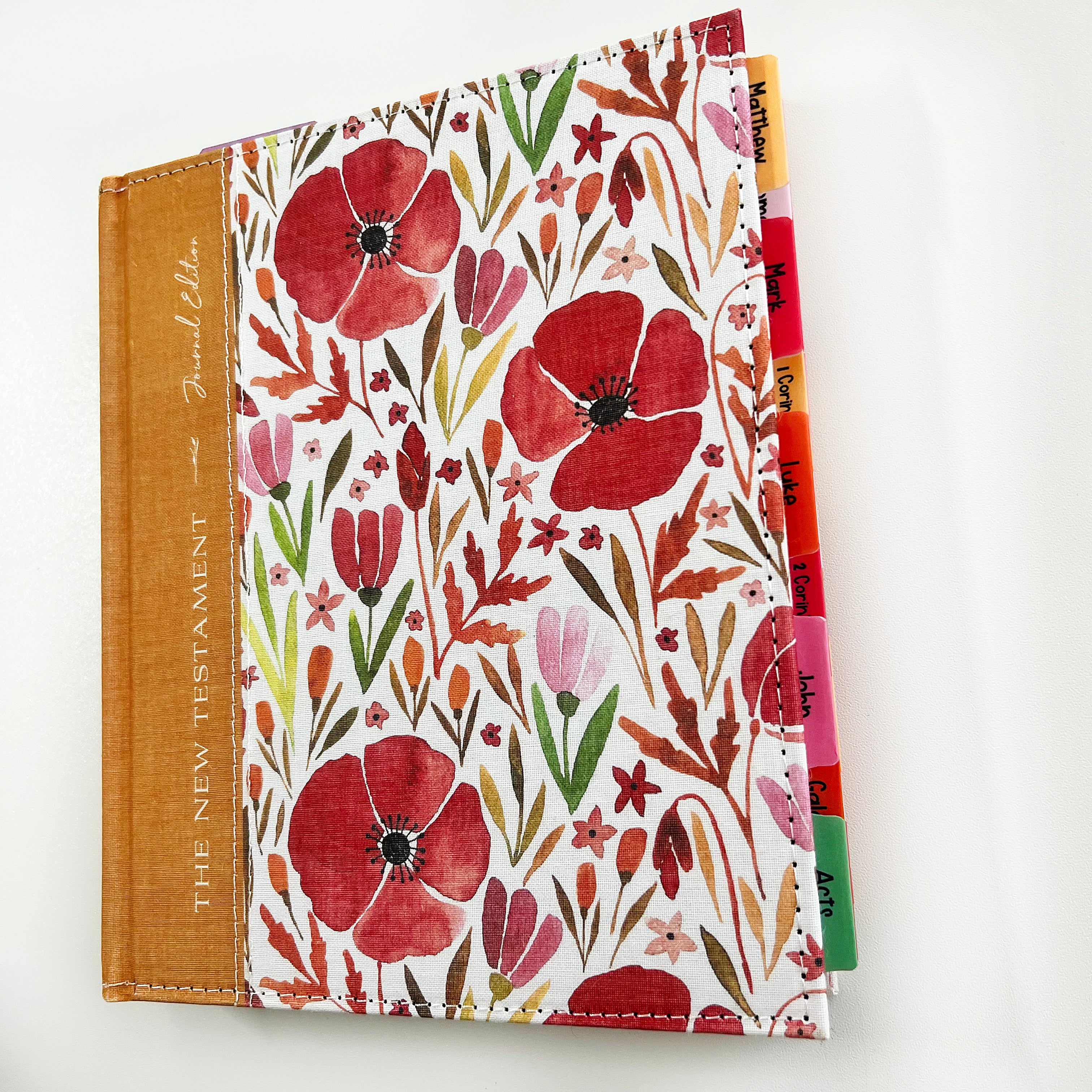

[“Electric Owl On Patrol”, made with DALL-E]

The advances in this technology have prompted some researchers to begin to tackle our ignorance about it and produce the kind of knowledge that will be crucial to understanding it and determining norms regarding its use. A prime example of this is work published recently by a large team of researchers at Stanford University’s Institute for Human-Centered Artificial Intelligence. Their project, “Holistic Evaluation of Language Models” (HELM), “benchmarks” 30 LLMs.

One aim of the benchmarking is transparency. As the team writes in a summary of their paper:

We need to know what this technology can and can’t do, what risks it poses, so that we can both have a deeper scientific understanding and a more comprehensive account of its societal impact. Transparency is the vital first step towards these two goals. But the AI community lacks the needed transparency: Many language models exist, but they are not compared on a unified standard, and even when language models are evaluated, the full range of societal considerations (e.g., fairness, robustness, uncertainty estimation, commonsense knowledge, disinformation) have not [been] addressed in a unified way.

The paper documents the results of a substantial amount of work conducted by 50 researchers to articulate and apply a set of standards to the continuously growing array of LLMs. Here’s an excerpt from the paper’s abstract:

We present Holistic Evaluation of Language Models (HELM) to improve the transparency of language models.

First, we taxonomize the vast space of potential scenarios (i.e. use cases) and metrics (i.e. desiderata) that are of interest for LMs. Then we select a broad subset based on coverage and feasibility, noting what’s missing or underrepresented (e.g. question answering for neglected English dialects, metrics for trustworthiness).

Second, we adopt a multi-metric approach: We measure 7 metrics (accuracy, calibration, robustness, fairness, bias, toxicity, and efficiency) for each of 16 core scenarios to the extent possible (87.5% of the time), ensuring that metrics beyond accuracy don’t fall to the wayside, and that trade-offs across models and metrics are clearly exposed. We also perform 7 targeted evaluations, based on 26 targeted scenarios, to more deeply analyze specific aspects (e.g. knowledge, reasoning, memorization/copyright, disinformation).

Third, we conduct a large-scale evaluation of 30 prominent language models (spanning open, limited-access, and closed models) on all 42 scenarios, including 21 scenarios that were not previously used in mainstream LM evaluation. Prior to HELM, models on average were evaluated on just 17.9% of the core HELM scenarios, with some prominent models not sharing a single scenario in common. We improve this to 96.0%: now all 30 models have been densely benchmarked on a set of core scenarios and metrics under standardized conditions.

Our evaluation surfaces 25 top-level findings concerning the interplay between different scenarios, metrics, and models. For full transparency, we release all raw model prompts and completions publicly for further analysis, as well as a general modular toolkit for easily adding new scenarios, models, metrics, and prompting strategies. We intend for HELM to be a living benchmark for the community, continuously updated with new scenarios, metrics, and models.
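To make the numbers in that excerpt a bit more concrete, here is a back-of-the-envelope sketch in Python. It is not part of HELM or its toolkit. The variable and function names are my own, and it assumes that "87.5% of the time" refers to the share of scenario-metric pairs in the 16-by-7 core grid that could actually be measured.

```python
# Figures quoted in the HELM abstract. All names here are illustrative
# assumptions; none of them come from the HELM codebase.
CORE_SCENARIOS = 16
METRICS = 7
TARGETED_SCENARIOS = 26
CORE_COVERAGE = 0.875  # "to the extent possible (87.5% of the time)"


def grid_size(scenarios: int, metrics: int) -> int:
    """Number of scenario-metric pairs if every metric applied to every scenario."""
    return scenarios * metrics


def measured_cells(scenarios: int, metrics: int, coverage: float) -> int:
    """Number of scenario-metric pairs measured, given a coverage fraction."""
    return round(grid_size(scenarios, metrics) * coverage)


total_cells = grid_size(CORE_SCENARIOS, METRICS)
measured = measured_cells(CORE_SCENARIOS, METRICS, CORE_COVERAGE)
total_scenarios = CORE_SCENARIOS + TARGETED_SCENARIOS

print(f"core grid: {total_cells} scenario-metric pairs")
print(f"measured:  {measured} pairs ({CORE_COVERAGE:.1%})")
print(f"scenarios: {total_scenarios} (core + targeted)")
```

On that reading, the core grid has 112 scenario-metric pairs, of which 98 were measured, and the 16 core plus 26 targeted scenarios account for the 42 scenarios mentioned in the abstract. Which particular pairs were left out is not stated in the excerpt, so the sketch does not try to guess.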

One of the authors of this paper is Thomas Icard, associate professor of philosophy at Stanford. His work on the HELM project has been mainly in connection to evaluating the reasoning capabilities of LLMs. One of the things he emphasized about the project is that it aims to be an ongoing and democratic evaluative process: “it aspires to democratize the continual development of the suite of benchmark tasks. In other words, what’s reported in the paper is just a first attempt at isolating a broad array of important tasks of interest, and this is very much expected to grow and change as time goes on.”

Philosophical questions across a wide array of areas—philosophy of science, epistemology, logic, philosophy of mind, cognitive science, ethics, social and political philosophy, philosophy of law, aesthetics, philosophy of education, etc.—are raised by the development and use of language models and by efforts (like HELM) to understand them. There is a lot to work on here, philosophers. While some of you are already on it, it strikes me that there is a mismatch between, on the one hand, the social significance and philosophical fertility of the subject and, on the other hand, the amount of attention it is actually getting from philosophers. I’m curious what others think about that, and I invite philosophers who are working on these issues to share links to their writings and/or descriptions of their projects in the comments.

Originally appeared on Daily Nous.